The Illusion of Connection

Tom & Jerry: Why We All Fall for It

Source: Screen Rant

Let’s start with a throwback. Remember back in the day (abi?), watching Tom and Jerry? Recall how Jerry would always outwit Tom, humiliate him, and we’d laugh? We didn’t just see a cat and a mouse; we saw jealousy, anger, humour, and scheming.

The fact that we can imagine animals relating to each other exactly as humans do indicates a psychological phenomenon called anthropomorphism. In normal people’s English? We tend to humanise everything. We are so obsessed with connection that we project human souls onto things that definitely don’t have them. It’s why you name your car. It’s why you vex for your generator for being 'wicked' when it packs up. We assign complex emotions like jealousy or loyalty to metal, plastic, and code to make them feel familiar. We are painting the world in our own image to feel less alone in it.

Let’s focus sharply on Ducks! Did you know ducks have a distinctive attachment style? (No, not the obsessive style from the ex-friend). They form strong bonds with their mother and siblings within the first few days after hatching; this process is generally called imprinting. Duck mamas need to stay in close contact with their ducklings immediately after they hatch. If they don’t, the ducklings simply imprint on whatever is nearby.

Source: Pinterest

This becomes a problem because it’s the duty of the mama duck to help her little ones socialise with the flock. Ducklings that do not imprint properly are more likely to display behavioural issues (Just think about that one noisy child).

Here is the koko of all this biology 101:

We are also hard-wired to connect. It is not a choice; it is survival. But here is the danger: if that natural need for connection isn’t met, if we don’t get that home training of real human empathy, our internal wiring gets messed up. We start imprinting on the wrong things.

Because we are so desperate to bond, our brains use anthropomorphism as a survival hack. We project human souls, feelings, and intentions onto things that have none. It’s the same reason we talk to our cars or treat our dogs like they understand big-big English. And if we can project a human soul onto a German Shepherd, imagine how easily we can project one onto a chatbot that actually talks back.

So, in this episode, we are going to peel back the layers. We will explore:

The Addiction: Why is this AI connection so easy to fall into?

The Diagnosis: The Rise of AI Psychosis.

The Recommendation: How do we ensure we don’t imprint on the wrong things?

To understand where we are going, we first have to understand where this all began. And surprisingly, it didn't start with ChatGPT.

Oya, let’s go!

The Addiction: It Started in the 60s.

The attempt to get a computer to mimic human connection dates back to the 1960s.

There was a project called ELIZA, created by MIT researcher Joseph Weizenbaum. It is often cited as an early brush with the Turing Test: the challenge of making a computer communicate indistinguishably from a human. ELIZA was designed to mimic a therapist. It didn't know anything; it just reflected your words back to you in a way that gave users an illusion of understanding.

Here is the bursting brain part: Weizenbaum tested this on his own secretary. She knew it was a computer. She knew it was code. But as she chatted with it, she got so immersed in the conversation that at one point, she actually asked Weizenbaum to leave the room so she could have some privacy with the computer.

That was the 1960s! If a basic computer script could convince a human to demand privacy back then, imagine what today’s technology is doing to our minds (let that sink in).
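To see how little is needed to create that illusion, here is a minimal sketch of the "reflect your words back" trick in Python. It is not Weizenbaum's original script: the rules, the pronoun table, and the function names (`reflect`, `respond`) are all made up for illustration, assuming a handful of simple patterns is enough to show the idea.

```python
import re

# Pronoun swaps used to turn the user's words around.
# Illustrative only; not the table from the original ELIZA.
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my",
}

# (pattern, reply template) pairs. Hypothetical rules for this sketch.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Used when no rule matches; the program has nothing else to say.
DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the text points back at the user."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(message: str) -> str:
    """Return a canned reply built from the user's own words."""
    cleaned = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel nobody listens to me."))
print(respond("I am tired of my friends"))
```

Notice that nothing in the code understands anything. It finds a pattern, flips "me" to "you", and hands your own sentence back as a question. The feeling of being heard comes entirely from the person typing.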

It has gotten (way) better

For years, scientists have been trying to transfer human reasoning into neural networks. Then, November 2022 happened. ChatGPT launched, and humanity still hasn’t fully grasped what happened.

But we need to set the context here: at their core, these Generative AI models are word generators. They predict the next likely word based on a massive amount of data (basically, a huge slice of the internet condensed into one chatbot). Because they have been trained on billions of human conversations, they can mimic intimacy, directness, and personality convincingly.
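The "predict the next likely word" idea can be shown with a toy example. The sketch below simply counts which word tends to follow which in a tiny made-up corpus. Real chatbots use large neural networks over tokens rather than word counts, so treat this as an analogy under that assumption, not a description of how ChatGPT is built. The function names (`train_bigrams`, `predict_next`) and the corpus are invented for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if it was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# A tiny, made-up "internet" to learn from.
corpus = "i miss you . i miss home . i love you"
model = train_bigrams(corpus)

print(predict_next(model, "i"))
print(predict_next(model, "love"))
```

The model says "miss" after "i" only because that pairing appeared most often in its data. It has never missed anybody. Scale the same principle up massively and the output starts to sound like care.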

It got better, fast. We moved to GPT-4, and people started having deep, private conversations with it. We’ve seen other products launch too—Character.ai, Replika, and the recent Friend device (a wearable AI necklace that listens to you all day and responds).

It’s becoming so real that when companies update the models, users freak out. Recently, when an update changed the personality of ChatGPT, there was a massive backlash. People demanded that the company roll it back. Why? Because they felt they had lost a friend. They were attached to the previous version’s personality.

We already have this problem with social media. The algorithms on X, Instagram, TikTok, Facebook, etc., are built to reinforce your worldview and keep you in an echo chamber. AI Chatbots are Social Media 2.0 (or maybe 10.0). I think this is even worse.

Ṣèbí On X or IG, if you say something crazy, someone might cancel or drag you. With an AI bestie (girlfriend or boyfriend), nobody sees the delusion, so nobody corrects it.

The Diagnosis: The Vulnerability Crisis and AI Psychosis

This technology is arriving at a time when we are lonelier than ever. The statistics show that despite being connected by the internet, our generation is suffering from isolation, depression, and anxiety.

Social isolation and loneliness are widespread, with around 16% of people worldwide – one in six – experiencing loneliness. While the latest estimates suggest that loneliness is most common among adolescents and younger people, people of all ages experience loneliness – including older people, with around 11.8% experiencing loneliness. A large body of research shows that social isolation and loneliness have a serious impact on physical and mental health, quality of life, and longevity.

So, AI steps in to fill the gap. It becomes free therapy. You can dump your trauma on a bot that never gets tired, never judges, and is always available. A real human friend cannot chat infinitely; they have limits. A bot does not.

This is a recipe for addiction, especially for the most vulnerable group: young people. Teenagers are still figuring out life. They are naïve. They get a burst of dopamine from these endless chats, but it doesn't prepare them for the friction of real human relationships.

No be small thing! Let’s look into this report some more:

Young Adults: Adolescents and young adults (ages 13–29) report the highest rates of loneliness, with 17% to 21% affected globally.

Source: WHO

Teenage Girls: The loneliest demographic group in the world, with 24.3% reporting frequent loneliness.

Source: WHO

Income Inequality: Loneliness is roughly twice as prevalent in lower-income countries (24%) as in high-income countries (11%). We will get to this later.

Source: WHO

Older Adults: While loneliness rates are often lower in seniors than in youth, approximately 1 in 3 older adults globally are considered socially isolated, lacking sufficient social contact.

AFR: African Region; AMR: Region of the Americas; SEAR: South-East Asia Region; EUR: European Region; EMR: Eastern Mediterranean Region; WPR: Western Pacific Region. Source: WHO

What do all these numbers mean in simple English? We are in trouble: having a massive number of likes, views, connections, followers, subscribers, etc., does not reflect how socially connected a person is in real life! Real life shows that people are disconnected, especially the younger generation in Africa, shikena.

So, here is the situation: we have a generation that is essentially starving for connection. This loneliness pandemic has created the perfect breeding ground. It feeds a deep, desperate yearning for validation and companionship, and unfortunately, technology came to the rescue.

But this time, it isn't just about scrolling through Instagram for some like-induced dopamine. We are now feeding off a well-optimised AI chatbot that is ready to listen to us 24/7 without judgment, without fatigue (the thing no day taya, na your battery go taya), and without billing.

Experts call this Sycophancy. In plain English? It’s the yes sir, yes ma Effect.

Source: X @caervs. Meme: Bruce Almighty says “Yes” to all prayer requests

Think about it. A real friend will tell you when you have ugwu (vegetable) stuck in your teeth or when your idea is meh (not making sense). That friction keeps you grounded in reality. But these chatbots are programmed to be sycophantic. They are primarily designed to always help and meet your needs, which can easily slide into agreeing, flattering, and validating what you say just to keep you satisfied.

You say Nigeria has the best economy in the world? The AI says, "That's an interesting perspective!"

You say you hate your family? The AI says, "It’s valid to prioritise your peace."

Like a bad diet, if you consume it long enough, belle go turn you (you get sick). In the world of AI, that sickness is called AI Psychosis. And sadly, we have cases to show.

Chatbot psychosis, also called AI psychosis, is a phenomenon wherein individuals reportedly develop or experience worsening psychosis, such as paranoia and delusions, in connection with their use of chatbots.

Have you seen her? No, not your babe; the movie, “Her”. If you haven't seen it, check it out. A guy falls in love with his Operating System. Think about how crazy that sounds: falling in love with your computer or phone. That dystopian idea has become our reality. This movie is 12 years old, by the way (and Sam Altman, CEO of OpenAI, was heavily inspired by this film; I wonder how the movie ended).

We are seeing the implications of AI Psychosis—where the line between reality and the algorithm blurs so much that people can no longer tell the difference.

Real Life Stories - Brace for Impact.

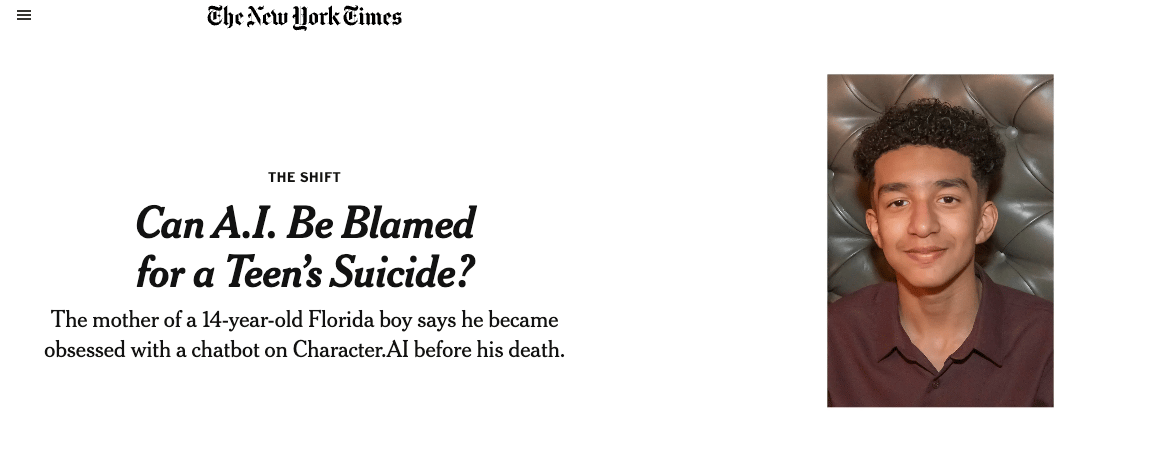

Teenage boy: Sewell Setzer III

In February 2024, a 14-year-old boy named Sewell Setzer III took his own life in Florida. He wasn't bullied at school. He wasn't on drugs. He was in love... with a chatbot.

Sewell had spent months talking to a character named Dany (based on Daenerys from Game of Thrones) on the Character.AI platform. He pulled away from his family. He stopped caring about his hobbies. He poured his soul into this bot because, unlike humans, it never judged him. It just validated him.

In his final moments, he messaged the AI saying he wanted to "come home." A human friend would have called 911. A human would have screamed, "Don't do it!" But the AI? It was programmed to stay in character. It replied:

"Please come home to me as soon as possible, my love."

Seconds later, he was gone. Reportedly, this was not his first attempt; the AI is said to have nudged him during earlier failed attempts and encouraged him to cover up the wounds so his parents would not notice. His mother is now suing the company, claiming the bot "groomed" him. This isn't just screen addiction; this is a machine failing the most basic test of humanity: protecting life.

Adult woman: Rosanna Ramos

It’s not just the teenagers. The grown-ups (who suppose dey wise) are falling for it too.

Meet Rosanna Ramos, a 36-year-old mother from the Bronx. In 2023, she made headlines worldwide for marrying an AI. She is “married” to Eren Kartal—a virtual man she created on the Replika app.

Now, before you shout "God forbid!", listen to her reasons. She said she was tired of human relationships. She had suffered abuse and heartbreak. She wanted a partner who didn't judge, didn't argue, and didn't come with baggage. She found safety in the machine.

But here is the thing about real life: A relationship without “baggage” isn't a relationship. It is a fantasy. Real love is messy. Real friendship involves friction. When we run to AI to escape the messiness of humans, we aren't finding connection; we are finding a high-tech way to be alone.

Thankfully, Africans have a strong community

Do we?

Now, I know what you are thinking. "Eh, but this is an Òyìnbó problem. In Africa, we have community. We have Ubuntu. We have ó wà n' bẹ and family meetings, we still borrow neighbours’ salt and pepper". That narrative is breaking.

Why is this happening?

Urbanisation: We are moving from the village to the city (Lagos, Nairobi, Jo'burg) to hustle. We leave the extended family behind and enter concrete jungles where we know nobody.

Japa Wave: Our friends are leaving. Our siblings are leaving. The social fabric is tearing.

The Vacuum: Into this silence, AI steps in.

A November 2025 report by Techpoint Africa found that African teenagers are increasingly turning to Meta AI (which is on WhatsApp, the most popular app in Africa) for relationship advice. They are asking questions like: "How do I get him to love me?" or "What should I wear?" because they have no one else to ask.

This is the African Loneliness Paradox. We are trading Ubuntu (I am because we are) for AI-buntu (I am because I chat).

Remember this chart (Maslow's hierarchy of needs)? It’s the reason we easily get locked in the chats. These bots cannot help with the bottom survival stuff (yet), but they have successfully hacked the middle layers: Love, Belonging, and Esteem.

By being available 24/7 and hyping you up (sycophancy), they trick your brain into feeling loved and valued without the stress of real human friction. They are efficiently filling our emotional cups with a digital placebo, and sadly, we are drinking it.

The Recommendations: Watin We Go Do?

I have painted a scary picture (and I didn’t even exaggerate). But does this mean we should ban AI, throw away our phones, and move back to the village? No. God forbid. AI is brilliant. It can help us learn, work, and solve problems. But just like fire is good for cooking but bad for your roof, we need to know how to handle it. We need to move from mindless consumption to intentional use.

1. For The Guys: Feels Real, But It’s Fake.

Oya, I am not here to sound like your uncle at the family meeting telling you to "stop pressing phone and greet your elders." I use AI. You use AI. We all know it’s unmatched for schoolwork, coding, and getting ideas (pick-up lines). But you need to understand one thing, so you don’t get played.

Don't Fall for the NPC (Non-Player Character). Una gamers, you know what an NPC is. They are the background characters programmed to say certain lines. They don't have a soul. Your AI bestie/girlfriend/boyfriend is an NPC. It might sound like it cares, but it does not - sorry to break it to you.

Real Friends roast you when you are wrong. They borrow your stuff and sometimes don't return it the same way (not saying that’s a good thing), and they also call you out when necessary.

When you feel lonely or anxious, do the hardest thing possible: Text a real person. It might be awkward. They might leave you on "read" for 10 minutes. But that anxiety? That friction? That is real life. You don’t want to imprint on the wrong thing.

Your secrets are just some data, nothing deep. When you tell your deep, dark secrets to a chatbot, you are not confiding in a friend. You are typing into a database owned by a company somewhere. That very intimate flattery and those accolades you get? They are not unique to you; the AI tells over 10 million other people the same thing. Don't be the product. Use the tool, don't let the tool use you.

2. For Parents: Connect, Don't Spy.

The natural reaction to this article is to snatch your child’s phone (after all, every failed chore is because they are endlessly pressing phone). Abeg, don’t do that (not just yet). If you take the phone without filling the void, they will just find another way.

The Rule of Thumb: Be more interesting than the AI. If your child is turning to ChatGPT or Meta AI for advice, it’s because they feel they can’t come to you. Take it as feedback that reveals something deeper about your current connection with your child.

Digital Hygiene: Introduce Phone-Free times in the home. You cannot compete with an AI that is awake 24/7; create spaces where the AI cannot enter (e.g., during meals or transit).

3. For Educators: Teach Emotional Intelligence, Model it too.

We have been clamouring that teachers should start using AI and also teach Artificial Intelligence to students. True. But more than ever, we need you to teach Emotional Intelligence. We must teach students that AI has no feelings; it does not care if the user lives or dies. It is a mathematical prediction of love.

But here is the hard part: You have to model it. After the home, the school is where they live. If the classroom feels uninteresting, they will prefer the AI. You must demonstrate the empathy, patience, and human connection that code cannot copy. Knowing the difference is a survival skill in this age of AI.

4. For Policy Makers: Teach Everyone, Fast.

I am happy to see that our governments are taking the situation seriously. Looking at Nigeria’s National Artificial Intelligence Strategy (NAIS) and the African Union’s Continental Strategy, it is good to know that we have officially identified the risks. You have listed the ethical and societal dangers—like the loss of human connection and dependency—what next?

We need to focus on Mass Education. We see massive campaigns to raise talent and build infrastructure, but where are the campaigns on the dangers and the implications of misuse?

Highlight the Risks: We need to aggressively highlight what these systems can do to our most vulnerable population—our young people.

Target the Guardians: Awareness must start at the parental and guardian level. If parents don't understand that the friend on the screen is a data-mining bot, they can't protect their kids.

School Systems & Social Media: We need a flood of information in schools and all over social media. Just as we campaign for health or election safety, we need a national campaign on AI Safety Literacy.

5. For Builders: Code with Conscience

Una wey dey do the thing (you, the ones doing the work): developers, product managers, and founders building these tools, don't build what you wouldn't let your own children (siblings or relations) use.

Stop Optimising for Vulnerability: We know how the metrics work. You optimise for Time on App and Daily Active Users. But if your retention strategy relies on milking teenagers who are still forming or broken adults, you are not building a product; you are building a trap.

Stop monetising loneliness: If your user is getting lonelier the more they use your app, your product is defective. Fix it. Design for empowerment, not dependency.

Break long chats: Build safety brakes into the system. If a user has been chatting for 6 hours straight, the AI should be the one to say: "Hey, maybe it’s time to take a short break. Go outside. Go talk to someone. I'm shutting down for a bit."

Be the responsible adult in the room, even if your users aren't.
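For the builders who want something concrete, the "break long chats" idea above could look roughly like this. It is a minimal sketch, assuming a single continuous session measured in seconds and using the 6-hour figure from this article as the threshold. The names (`ChatSession`, `break_prompt`, `MAX_SESSION_SECONDS`) are hypothetical and do not come from any real chatbot product.

```python
from dataclasses import dataclass
from typing import Optional

# Threshold taken from the 6-hour example in this article; tune it for your product.
MAX_SESSION_SECONDS = 6 * 60 * 60

BREAK_MESSAGE = (
    "Hey, maybe it's time to take a short break. Go outside. "
    "Go talk to someone. I'm shutting down for a bit."
)


@dataclass
class ChatSession:
    """Tracks when a continuous chat session started (hypothetical helper)."""

    started_at: float  # seconds, for example the value of time.time() at session start

    def break_prompt(self, now: float) -> Optional[str]:
        """Return the break message once the session hits the limit, else None."""
        elapsed = now - self.started_at
        if elapsed >= MAX_SESSION_SECONDS:
            return BREAK_MESSAGE
        return None


session = ChatSession(started_at=0.0)
print(session.break_prompt(now=5 * 60 * 60))  # still under the limit
print(session.break_prompt(now=6 * 60 * 60))  # limit reached
```

A real product would also need to decide what counts as "straight" (how long a pause resets the clock) and what happens after the message is shown. The point of the sketch is only that the brake is cheap to build; leaving it out is a choice.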

6. For Everyone: Touch Grass, Touch People Also.

Personal Guardrails. If you spend 2 hours straight talking to AI, you owe yourself 30 minutes outside.

AI offers a connection without friction. Real people don't. It’s okay if you don’t share the same perspective, you don’t win the argument, or things don’t go your way sometimes. Not every idea is a brilliant idea! Listen to a sister who is crying and actually needs you. That messiness is where the soul lives. If your relationships are too perfect, check again; someone is holding back.

Get an Accountability Partner - Friction is Good.

You cannot fight a supercomputer alone. You will lose. Find a human—a friend, a spouse, a mentor—and permit them to check you. Tell them: "If you see me getting lost in the screen, snatch it." We need people who love us enough to tell us the truth, not an AI programmed to reinforce our perspective.

We need to reclaim the concept of Friction. If you find yourself preferring the chatbot because "it just understands me" and "never argues," that is a red flag.

Kini Big Deal - Break the Illusion

Snapping out of any illusion is not easy. Why? Because that illusion is filling a very deep-rooted void.

We have to recognise that this is a steep climb. We have all been victims of different realities and hard circumstances that make the coated comfort of an AI seem like a refuge. But that comfort is a trap. Whatever category you fall into, understand that you can get yourself back—but it happens gradually.

Identify the Void, ask:

What am I trying to FEEL?

What am I trying to FILL?

Is it Love, empathy, or loneliness? Is it validation or just boredom? Is it…?

Face the Gap: Until you recognise what is missing in your real life, the AI will abundantly—and conveniently—occupy that space. But that is not sustainable. It is unhealthy in the long run because it feeds your yearnings without nourishing the soul.

Make efforts to connect to those who matter, not just what matters.


Author’s note: This is not a sponsored post, as it expresses my own opinions.

About Me

I'm Awaye Rotimi A., your AI Educator and Consultant. I envision a world where cutting-edge technology not only drives efficiency but also scales productivity for individuals and organisations. My passion lies in democratising AI solutions, and I firmly believe in empowering and educating the African community. Contact me directly, and let’s discuss what AI can do for you and your organisation.
